What is DeepNude and Undress AI?

DeepNude and Undress AI are advanced artificial intelligence technologies that utilize deep learning algorithms to manipulate images, primarily those featuring clothed individuals. The technology aims to create a more realistic-looking version of the original image where the subject appears naked or undressed. This is achieved by analyzing various features within the original image and generating an output that closely resembles the appearance of a nude or semi-nude version of the person in question.

Introducing DeepNude AI Technologies

DeepNude AI technologies have gained significant attention due to their ability to create realistic, high-quality images of people in various states of undress. These tools use machine learning algorithms and convolutional neural networks (CNNs) to detect the human body's structure, skin tone, and clothing patterns in order to generate nude or semi-nude images. Names commonly mentioned alongside these tools include OpenPose, SMPL, and Naked AIs, although OpenPose is a general-purpose pose-estimation library and SMPL is a parametric human body model rather than DeepNude tools.

Understanding the Technology Behind DeepNude and Undress AI

DeepNude and Undress AI technologies are based on a combination of image recognition, deep learning algorithms, and computer vision techniques. These tools work by first analyzing an input image featuring a clothed individual to identify key features such as the subject's body structure, clothing patterns, and skin tone. Once these elements have been identified, the AI technology generates a new image that closely resembles the original but with the person appearing undressed or nude. This process typically involves several steps, including pre-processing, feature extraction, model training, and post-processing to ensure a realistic output.

What is Stable Diffusion?

Stable Diffusion is a state-of-the-art AI image generation model that can create, modify, and enhance images based on text descriptions. It uses a latent diffusion process to generate high-quality images with remarkable detail and creativity.

Key Features

- Text-to-image generation

- Image-to-image transformation

- Inpainting and outpainting

- Style transfer and control

Use Cases

- Digital art creation

- Content design

- Concept visualization

- Photo editing

Introducing ComfyUI

ComfyUI is a powerful node-based interface for Stable Diffusion that offers unprecedented control and flexibility in the image generation process. It allows users to create complex workflows through a visual programming interface.

Advantages of ComfyUI

- Visual workflow creation

- Custom node development

- Advanced parameter control

- Workflow sharing and reuse

- Real-time preview capabilities

Download undress AI workflow

Example Workflow

Example workflow downloads have been removed due to regulations.

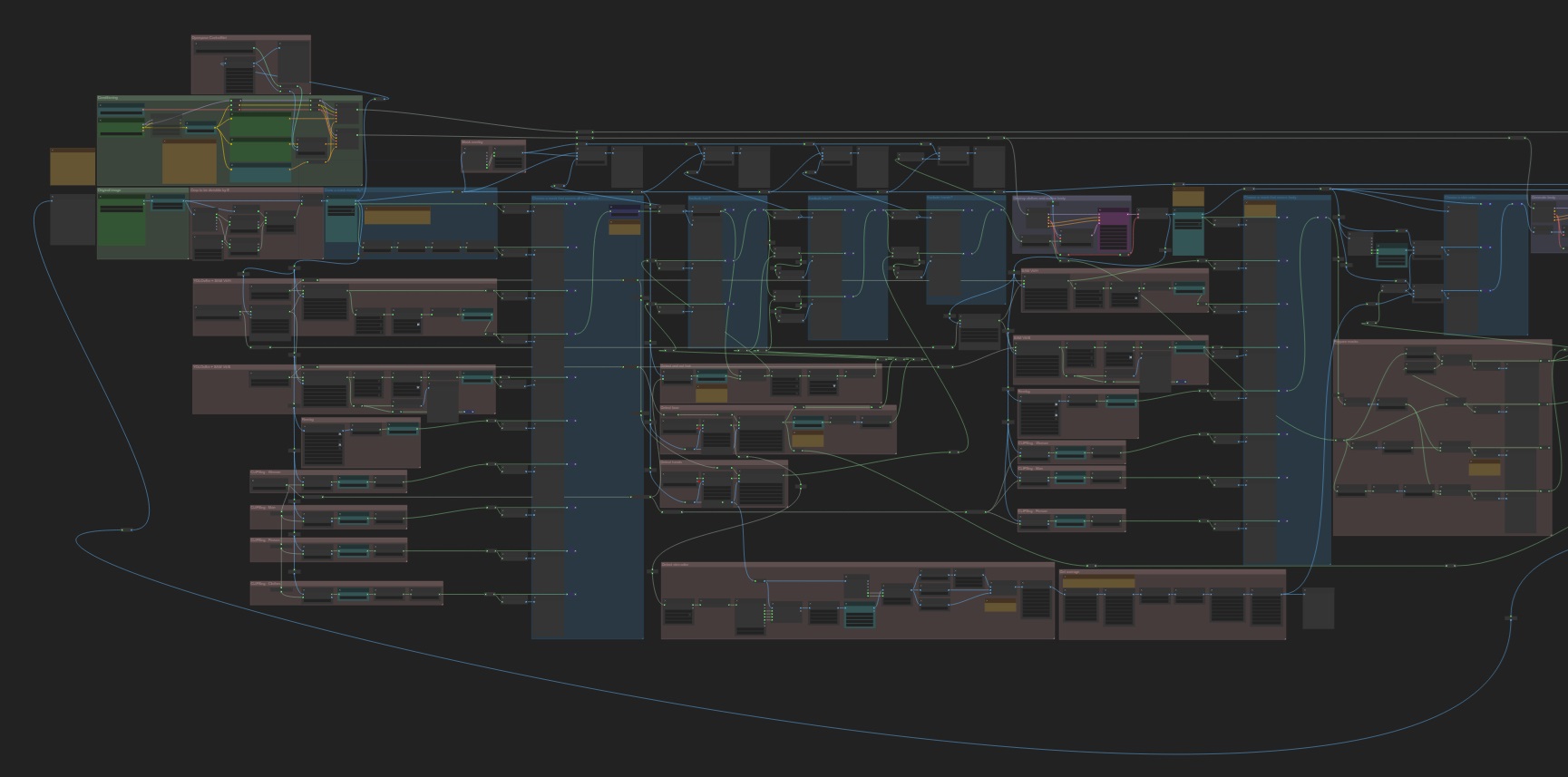

Snapshot of the workflow:

Understanding the Workflow

Creating images with Stable Diffusion through ComfyUI involves a systematic workflow that gives you precise control over the generation process.

1. Input Preparation

- Write clear, detailed prompts describing your desired image

- Prepare reference images if doing image-to-image generation

- Select appropriate models and VAE

- Configure basic parameters (dimensions, seed, etc.)

2. Node Setup in ComfyUI

- Create a basic generation pipeline using essential nodes:

  - KSampler

  - CLIPTextEncode

  - VAE Decode/Encode

  - Load Checkpoint (class name CheckpointLoaderSimple)

- Connect nodes in the correct order

- Add conditioning and control nodes as needed
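As a rough illustration of what "connecting nodes in the correct order" means, the sketch below writes a plain text-to-image graph in the dictionary layout that ComfyUI uses for API-format workflows, then checks that every link points at a node that exists. The node class names are ComfyUI built-ins; the checkpoint filename, prompt text, parameter values, and the `dangling_links` helper are placeholders invented for this example, not part of any workflow from this page.

```python
from typing import Any, Dict, List

# A link is written as [source_node_id, output_index].
# CheckpointLoaderSimple exposes MODEL (0), CLIP (1), and VAE (2).
# Filename, prompts, and numeric values are illustrative placeholders.
workflow: Dict[str, Dict[str, Any]] = {
    "1": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "model.safetensors"}},
    "2": {"class_type": "CLIPTextEncode",  # positive prompt
          "inputs": {"text": "a mountain lake at sunrise", "clip": ["1", 1]}},
    "3": {"class_type": "CLIPTextEncode",  # negative prompt
          "inputs": {"text": "blurry, low quality", "clip": ["1", 1]}},
    "4": {"class_type": "EmptyLatentImage",
          "inputs": {"width": 512, "height": 512, "batch_size": 1}},
    "5": {"class_type": "KSampler",
          "inputs": {"model": ["1", 0], "positive": ["2", 0],
                     "negative": ["3", 0], "latent_image": ["4", 0],
                     "seed": 42, "steps": 20, "cfg": 7.0,
                     "sampler_name": "euler_ancestral",
                     "scheduler": "normal", "denoise": 1.0}},
    "6": {"class_type": "VAEDecode",
          "inputs": {"samples": ["5", 0], "vae": ["1", 2]}},
    "7": {"class_type": "SaveImage",
          "inputs": {"images": ["6", 0], "filename_prefix": "example"}},
}


def dangling_links(graph: Dict[str, Dict[str, Any]]) -> List[str]:
    """Return a description of every link whose source node is missing."""
    problems: List[str] = []
    for node_id, node in graph.items():
        for name, value in node["inputs"].items():
            is_link = (isinstance(value, list) and len(value) == 2
                       and isinstance(value[0], str)
                       and isinstance(value[1], int))
            if is_link and value[0] not in graph:
                problems.append(f"{node_id}.{name} -> {value[0]}")
    return problems


print(dangling_links(workflow))  # an empty list means every link resolves
```

The check only validates wiring; it does not confirm that the output type of a source node matches what the receiving input expects.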

3. Advanced Techniques

- ControlNet for precise image control

- LoRA integration for style adaptation

- Upscaling and face restoration

- Mask generation for inpainting

4. Optimization Tips

- Use appropriate sampling methods (Euler a, DPM++ 2M Karras)

- Adjust steps and CFG scale for quality control

- Save successful workflows as templates

- Experiment with different model merges
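To make the CFG scale setting less abstract, here is a minimal sketch of the classifier-free guidance combination that the scale controls: the sampler's noise prediction is pushed away from the unconditional prediction and toward the prompt-conditioned one. The function name `apply_cfg` and the three-element lists are hypothetical stand-ins for the tensors a real sampler works with.

```python
from typing import List, Sequence


def apply_cfg(uncond: Sequence[float], cond: Sequence[float],
              cfg_scale: float) -> List[float]:
    """Combine unconditional and prompt-conditioned noise predictions.

    guided = uncond + cfg_scale * (cond - uncond)
    """
    if len(uncond) != len(cond):
        raise ValueError("predictions must have the same length")
    return [u + cfg_scale * (c - u) for u, c in zip(uncond, cond)]


# Toy values standing in for model outputs.
uncond = [0.0, 1.0, 2.0]
cond = [1.0, 1.0, 0.0]

print(apply_cfg(uncond, cond, 1.0))  # scale 1 reproduces the conditioned prediction
print(apply_cfg(uncond, cond, 7.0))  # larger scales exaggerate the difference
```

A scale of 1 leaves the conditioned prediction unchanged, while higher values follow the prompt more strictly. Very high values tend to produce oversaturated or distorted results.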

Pro Tips

- Start with simple workflows and gradually add complexity

- Document successful prompts and settings

- Join the ComfyUI community to share and learn from others

- Regularly back up your custom nodes and workflows

Image Processing Workflow

Follow these steps to process images effectively using ComfyUI's powerful workflow system.

- Uploading input images: Upload your base image(s) or select from preloaded samples.

- Preparing the image: Adjust size and orientation as needed.

- Applying style prompts: Enter prompts for artistic direction (colors, textures, moods).

- Selecting a workflow: Choose from pre-defined workflows (CLIP, Reroute, etc.).

- Customizing the workflow: Modify node properties and connections.

- Refining the output: Adjust settings for skin tones, body types, and backgrounds.

- Reviewing and revising: Review and make final adjustments before export.